A BMW virtual driving experience powered by CAD data processed and visualized in Unreal Engine. Image courtesy of Unreal, Epic Games.

August 1, 2018

In 1998, Epic Games released “Unreal,” a first-person shooter (FPS). The game environment was a richly textured virtual world where every rust-covered pillar, rocky cliff or cavernous cave seemed to have physical properties. Though rudimentary by today’s standards, the game included wooden crates that disintegrated when fired on, streams and creeks that flowed with radiation-bright water, and old warehouses lit with flickering torches.

The technology behind the game eventually became Unreal Engine, an interactive visualization environment available for licensing. The use of the engine is no longer confined to movies and games. Unreal Engine now powers, among other things, bus simulators, architectural visualization and digital twins of real museums.

“In an interactive environment like ours, developers can leverage the animation tools and physics engine to create kinematic behaviors. We’re starting to see a lot of people using the Unreal Engine for collaborative VR,” says Marc Petit, GM for Unreal Engine Enterprise, Epic Games.

Twenty years ago, as FPS games went online, gamers jumped into Unreal Tournament to wage multiplayer battles against one another. Today, engineers and architects are remotely logging into virtual reality (VR) apps powered by the game engine, not for combat but for collaboration. As Petit sees it, game engines are now the aggregation platforms where CAD, VR, sensor data and enterprise data intersect.

Led by Automotive

For the data conversion, REWIND relied on Datasmith and Unreal Studio. “So what used to take three or four weeks for my guys, now just takes a few hours,” says Rogers.

In July 2017, Epic Games released Datasmith, a tool kit for importing CAD data into the Unreal Engine. Previewed at the SIGGRAPH 2017 Unreal Engine User Group, the debut version of Datasmith supported about 20 popular CAD formats and standard 3D formats, including CATIA, Siemens NX, SOLIDWORKS, Autodesk Inventor, STL and STEP.

“A lot of our customers use the Datasmith technology to automate the process of creating digital twins. Car companies are leading this,” notes Petit.

Simple, Fast Conversion

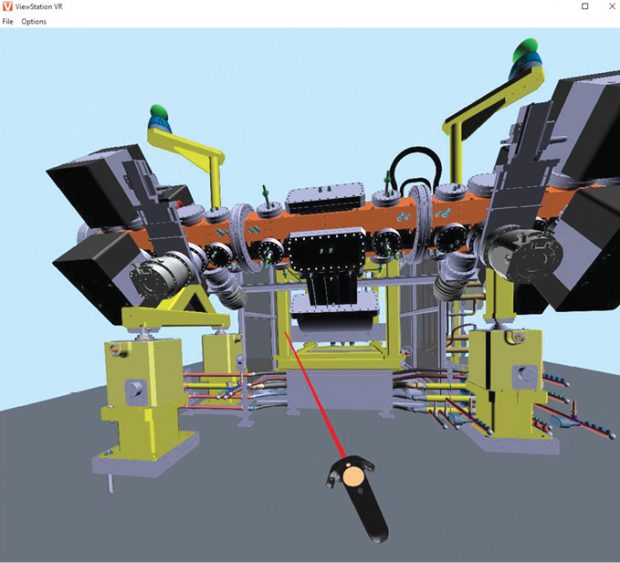

Germar Nikol and his colleagues at KISTERS developed a 2D and 3D CAD file viewer called 3DViewStation. Recently, they decided to bring their product to the next level by updating it with VR functions.

The multiple steps required to bring automotive CAD data into VR can “take up to one week,” estimates Nikol, KISTERS’ director of 3D Visualization Technologies. “Since engineering data is changing all the time, you’d need to repeat these steps when you need to bring in an updated model again.”

3DViewStation doesn’t use a game engine, but its own kernel for visualization and VR display, Nikol says. “Our strength is that we can read all the native CAD file formats and standard 3D formats instantly, and that we are bringing typical engineering functionalities like measurement, sectioning, wall thickness analysis and digital mockups to the VR environment,” he adds. The 3DViewStation runs on Windows Mixed Reality and Valve’s SteamVR platform. Compatible VR headsets include HTC VR, Lenovo Explorer, Dell Visor and HP Mixed Reality.

KISTERS’ viewer is available as a desktop program, a browser-based application or a VR edition. “With our 3DViewStation, you can load native CAD files directly,” says Nikol. “You can also perform batch processing using our desktop product and load the file—this will load much faster. You can use our desktop product to prepare data, like doing some analysis beforehand or making the model beautiful for marketing, and store them as views inside the 3DViewStation file. Finally, you can also have the product lifecycle management (PLM) system drive the web viewing.”

The last method enables you to display metadata in the VR view, or use VR as a product configurator that brings up different variants and components on demand, Nikol says.

From CAD to VR

3D CAD modeling programs used by engineers run on kernels that focus on geometry construction and precision, and less on photorealistic visualization. Therefore, most engineering-centric VR applications rely on game engines, such as Unity or Unreal, to enable visualization. The main issues in the CAD-to-VR pipeline are (1) the need to convert the CAD data into a game engine-compatible, VR-ready format and (2) the need to reduce the level of detail, turning the rich engineering model into a lighter, nimbler one.

“Unity and all game engines use tessellated surfaces internally rather than B-rep geometry, so there aren’t solids in a game engine,” explains Ed Martin, senior technical product manager at Unity. “Game engines and real-time 3D experience engines work with tessellated data, so at some point in the process before loading data to the graphics processing unit (GPU), the model needs to be tessellated.”

Unity’s bridge to CAD is a partner product called PiXYZ. “Most CAD data formats can be loaded into the Unity engine with PiXYZ,” says Martin. “The tessellation is performed as part of the process of importing and optimizing the data. There is always some inherent geometrical approximation whenever data is tessellated, but PiXYZ understands the original CAD patches and is able to produce an excellent tessellated model that is accurate even where fine features exist in the original design.”
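The “inherent geometrical approximation” Martin mentions can be illustrated with a circle. When a round edge is replaced by straight segments, the largest gap between a segment and the true arc is the chordal deviation, radius × (1 − cos(π / n)) for n equal segments. The sketch below is a minimal illustration of that formula, not the method PiXYZ or any game engine actually uses; the function names are hypothetical.

```python
import math


def chordal_deviation(radius: float, segments: int) -> float:
    """Largest gap between a circle and a regular polygon with `segments` sides."""
    return radius * (1.0 - math.cos(math.pi / segments))


def segments_for_tolerance(radius: float, tolerance: float) -> int:
    """Fewest segments that keep the chordal deviation within `tolerance`.

    Hypothetical helper for illustration; radius and tolerance share one unit.
    """
    if radius <= 0 or tolerance <= 0:
        raise ValueError("radius and tolerance must be positive")
    if tolerance >= radius:
        return 3  # a triangle is the coarsest closed polygon
    # Each segment spans an angle of 2 * half_angle as seen from the center.
    half_angle = math.acos(1.0 - tolerance / radius)
    return max(3, math.ceil(math.pi / half_angle))


if __name__ == "__main__":
    for tol in (1.0, 0.1, 0.01):
        n = segments_for_tolerance(10.0, tol)
        print(f"tolerance {tol}: {n} segments, deviation {chordal_deviation(10.0, n):.5f}")
```

Tightening the tolerance raises the segment count, which is the trade-off between accuracy and model weight that runs through the rest of the pipeline.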

From CAD to AR

In immersive VR apps, the high-res photo-real imagery takes center stage. On the other hand, in AR, gesture-driven natural interaction takes precedence. The way you rotate a digital object using your fingers, or the way you poke a virtual button to activate certain operations—these generally define AR functions.

The biggest challenge in integrating 3D CAD into an AR experience is “format compatibility,” notes Abraham Georgiadis, UX designer at AR developer ManoMotion. “I personally believe that since we are already following a format agnostic approach in other content cases, the 3D CAD format should be treated as such,” he says.

The computer screen is simply not up to the task when it comes to merging pixels with reality. That’s something AR applications are better positioned to do, Georgiadis notes. Take automotive design, for instance. “Wouldn’t it be much more beneficial to project the design of a car in a real-world setting, like a garage or the road, and inspect it for the criteria that have been set?” he asks.

“We could take the next step and test our initial assumptions ... Does this car have enough space for the driver to feel comfortable?” Georgiadis says, imagining the possibilities in AR. “Are the aerodynamics in place? What would happen if we swapped the model of the engine for the next one?” These tests are only possible if the human, the real environment and the digital design can share the same space—something AR can facilitate.

Being Natural Takes Time

Although AR is introducing a more natural physical-digital interaction (using fingers, hands and eyes to interact with digital objects), ironically, for the generations already trained on mice and keyboards, the new paradigm may feel “unnatural” for a while.

“We now have multiple examples of positional tracking technologies, which is fundamental in AR. The next logical step is adding natural interaction with digital overlay,” says Georgiadis. That means, in AR, pixels defined as rubber, glass or steel would look, feel and react like those materials. With the use of physically-based rendering (PBR) materials in AR, the realism in the materials’ look is unprecedented, especially in the way they react to light. But the feel remains a puzzle for AR developers to solve.

Managing the Level of Details

Generally, the purpose of a VR model is to allow someone (a customer, client or remote collaborator) to experience the design. Some essential details in the manufacturing CAD model (nuts, bolts, brackets and holes, for example) are not critical for VR. That is why the CAD model needs to be judiciously parsed in preparation for the VR session.

With Unreal Engine’s scripting, users can specify that features below a certain threshold be removed (for example, holes with 0.5-m diameter), according to Petit. “So when the original engineering file is updated, the automation script can kick off to execute the same routines to produce a new VR object,” he points out.
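The threshold rule Petit describes can be pictured as a simple filter that runs each time the source file changes. The sketch below is a hypothetical illustration in plain Python; the `Feature` record and `simplify_features` function are assumptions made for this example and are not part of Unreal Engine's or Datasmith's scripting interface.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Feature:
    """Hypothetical stand-in for one feature read from a CAD model."""
    name: str
    kind: str    # for example "hole", "fillet" or "bracket"
    size: float  # characteristic size (diameter for a hole), in model units


def simplify_features(features, min_size, kinds=("hole",)):
    """Split features into (kept, removed).

    A feature is removed only if its kind is listed in `kinds`
    and its size falls below `min_size`.
    """
    kept, removed = [], []
    for feature in features:
        too_small = feature.kind in kinds and feature.size < min_size
        (removed if too_small else kept).append(feature)
    return kept, removed


if __name__ == "__main__":
    model = [
        Feature("bolt_hole_1", "hole", 0.3),
        Feature("main_bore", "hole", 4.0),
        Feature("bracket_a", "bracket", 0.2),
    ]
    kept, removed = simplify_features(model, min_size=0.5)
    print("kept:", [f.name for f in kept])
    print("removed:", [f.name for f in removed])
```

Because the rule is data rather than manual cleanup, rerunning it on an updated model gives the same result every time, which is the point of automating the step.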

“PiXYZ can optimize a model to fit a target use case and device platform,” says Martin. “For example, an engineer sectioning a design in VR with a high-end workstation will want a highly accurate model with all interior details. By comparison, an AR car configurator running on a mid-spec smartphone will need the model stripped down so that hidden components are removed and surfaces decimated to maintain performance. PiXYZ provides features to do all of these optimizations, and the process can be automated with scripted scenarios.”
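To give a sense of what “surfaces decimated” can mean in practice, the sketch below uses vertex clustering, one of the simplest decimation techniques: vertices that fall into the same grid cell are merged, and triangles that collapse are dropped. This is a textbook method chosen for illustration under that assumption, not a description of PiXYZ's algorithms, and the function name is hypothetical.

```python
import math


def decimate_by_clustering(vertices, triangles, cell_size):
    """Vertex-clustering decimation (illustrative sketch).

    vertices:  list of (x, y, z) tuples
    triangles: list of (i, j, k) index tuples into `vertices`
    cell_size: grid spacing; larger cells give a coarser mesh
    """
    if cell_size <= 0:
        raise ValueError("cell_size must be positive")

    cell_to_index = {}  # grid cell -> index of the merged vertex
    sums = []           # running [sum_x, sum_y, sum_z, count] per merged vertex
    remap = []          # old vertex index -> merged vertex index
    for x, y, z in vertices:
        cell = (math.floor(x / cell_size),
                math.floor(y / cell_size),
                math.floor(z / cell_size))
        index = cell_to_index.setdefault(cell, len(sums))
        if index == len(sums):
            sums.append([0.0, 0.0, 0.0, 0])
        acc = sums[index]
        acc[0] += x
        acc[1] += y
        acc[2] += z
        acc[3] += 1
        remap.append(index)

    # Each merged vertex sits at the average position of its cluster.
    new_vertices = [(sx / n, sy / n, sz / n) for sx, sy, sz, n in sums]

    new_triangles, seen = [], set()
    for a, b, c in triangles:
        a, b, c = remap[a], remap[b], remap[c]
        if a == b or b == c or a == c:
            continue  # the triangle collapsed to an edge or a point
        key = frozenset((a, b, c))
        if key in seen:
            continue  # duplicate of a triangle already kept
        seen.add(key)
        new_triangles.append((a, b, c))
    return new_vertices, new_triangles
```

A larger `cell_size` would suit the smartphone case in Martin's example, while a very small one leaves the mesh effectively untouched for workstation use.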

As efficient as these algorithms and tools are, human judgment is still required. “The [CAD-to-VR] transformation requires decisions about how to optimize and reduce the amount of information, and those decisions are best done by humans who understand VR rendering hardware and software, as well as the use case for the simulation,” says Matthias Pusch, head of global sales and marketing for VR collaboration app developer WorldViz. As he sees it, a one-click button that can account for the myriad conversion decisions is highly unlikely.

Digging Up the Hidden Kinematics

Most CAD assemblies have joints and connectors (welds, bolts or rotation, for example) that define the design’s possible movements and degrees of freedom. The VR models’ value in simulation and testing will significantly increase when they can inherit their CAD ancestors’ mechanical behaviors. Reproducing the assembly’s kinematic behaviors with accuracy requires the VR program to have access to this info, buried deep inside the CAD model.

“CAD programs like Autodesk Inventor or SOLIDWORKS don’t expose their constraints system,” Petit points out. If the original assembly is a plane’s landing gear, “we don’t have access, for example, to the hierarchy of constraints that define a landing gear’s motions. We’re talking to the vendors to let us have access to the constraints so we can reproduce the assembly motions,” he adds.

For now, for the VR apps to reproduce the original design’s kinematic constraints, “it often requires custom development in order to get the desired results,” says Pusch. “For WorldViz’s VR creation and collaboration software Vizible, we’ve built inverse kinematics systems for various types of equipment so that people can come together in VR and realistically see how operating room equipment moves through a particular space.”
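For readers unfamiliar with the term, inverse kinematics means working backward from where the end of a mechanism should be to the joint angles that put it there. The sketch below solves the textbook case of a planar arm with two hinged links. It is a minimal illustration with hypothetical function names and has no connection to how Vizible or Vizard implement their systems.

```python
import math


def two_link_ik(x, y, l1, l2):
    """Joint angles (shoulder, elbow) in radians that place the tip of a
    planar two-link arm at (x, y), or None if the point is out of reach.

    l1 and l2 are the link lengths. Of the two mirror-image solutions,
    this returns the one with a non-negative elbow angle.
    """
    dist_sq = x * x + y * y
    # Law of cosines gives the elbow angle from the distance to the target.
    cos_elbow = (dist_sq - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    if not -1.0 <= cos_elbow <= 1.0:
        return None
    elbow = math.acos(cos_elbow)
    shoulder = math.atan2(y, x) - math.atan2(l2 * math.sin(elbow),
                                             l1 + l2 * math.cos(elbow))
    return shoulder, elbow


def forward(shoulder, elbow, l1, l2):
    """Tip position for the given joint angles; used to check the solution."""
    x = l1 * math.cos(shoulder) + l2 * math.cos(shoulder + elbow)
    y = l1 * math.sin(shoulder) + l2 * math.sin(shoulder + elbow)
    return x, y


if __name__ == "__main__":
    angles = two_link_ik(1.2, 0.5, 1.0, 1.0)
    print("angles:", angles)
    print("tip:", forward(*angles, 1.0, 1.0))
```

Real equipment has more joints, limits on each joint and three dimensions to contend with, which is part of why Pusch says custom development is often required.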

WorldViz uses the proprietary Vizard simulation engine, which supports OpenSceneGraph and Python scripting. The upcoming version of Vizard (due out in summer) will let users employ PBR shaders. It will also support the GL Transmission Format (glTF) for 3D models, according to Pusch. “Vizard has been around for 16 years as a workhorse for many industrial and academic R&D labs,” he adds.

SOLIDWORKS Visualize VR

In late 2017, SOLIDWORKS, one of the widely used mechanical CAD modelers, introduced a VR content publishing feature in its rendering program SOLIDWORKS Visualize Professional. In the step-by-step blog post guide to using this feature, SOLIDWORKS Product Manager Brian Hillner wrote: “Visualize 2018 allows you to create ‘360’ cameras, which render out a flattened-spherical image, changing how you design, develop and deliver your products. This image can then be viewed with any VR headset—from HTC Vive all the way to even a $15 Google Cardboard.”
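A “flattened-spherical” 360 image is commonly stored as an equirectangular projection, in which the horizontal axis is longitude and the vertical axis is latitude. Assuming that is the layout meant here, the sketch below shows how a viewing direction maps to a pixel position in such an image. The axis convention (+y up, −z forward) and the function name are assumptions made for this example, not details taken from SOLIDWORKS Visualize.

```python
import math


def direction_to_pixel(dx, dy, dz, width, height):
    """Map a view direction to (column, row) in an equirectangular image.

    Assumed convention: +y is up, -z is forward, and the forward
    direction lands at the center of the image.
    """
    length = math.sqrt(dx * dx + dy * dy + dz * dz)
    if length == 0:
        raise ValueError("direction must be non-zero")
    dx, dy, dz = dx / length, dy / length, dz / length
    longitude = math.atan2(dx, -dz)  # -pi..pi, 0 means straight ahead
    latitude = math.asin(dy)         # -pi/2..pi/2, 0 means the horizon
    column = (longitude / (2.0 * math.pi) + 0.5) * width
    row = (0.5 - latitude / math.pi) * height
    return column, row


if __name__ == "__main__":
    print(direction_to_pixel(0, 0, -1, 4096, 2048))  # straight ahead
    print(direction_to_pixel(1, 0, 0, 4096, 2048))   # to the right
```

A headset viewer runs this mapping in reverse for every pixel it draws, which is why a single pre-rendered image can be looked around in but not walked through.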

As more design software makers consider their clients’ appetite for VR design reviews, they may begin adding easy VR-content creation tools into the modeling programs.

Trained on the Digital Twins

J.C. Kuang, an analyst from Greenlight Insights, oversees an initiative that tracks roughly 100 enterprise AR-VR software solutions providers to gauge the emerging market. He’s the author of the Greenlight Insights report titled “XR in Enterprise Training,” which covers the use of AR-VR as safe, cost-effective, engaging and efficient training platforms.

“In Western Europe, traditionally the hotbed of manufacturing and logistics operations, we’ve seen significant adoption of AR,” notes Kuang. Early adopters seem to focus on AR chat, remote assistance, remote expert help, augmented annotation and training, he adds.

“To some observers, it might appear that AR is only good for a handful of scenarios, but we see this differently,” says Kuang. “It means the market for enterprise AR is mature enough so that solutions providers have figured out the core things AR is best positioned to solve.”

Kuang also notes the rise of specialized solution providers, breaking away from general-purpose one-size-fits-all AR. “If you’re in logistics, you go to this AR provider. If you’re in food service, you go to that one. If warehousing, then another one, and so on,” he explains.

Some of the most sophisticated AR training applications are spearheaded by the military, according to Kuang’s research. “The military would usually contract five or six different component providers to help them build a virtual cockpit with ultra-high-res 4K textures with bespoke hardware that emulates switches and throttles. The goal is to supplement and eventually replace the traditional simulator rigs,” he says.

A Short-Lived Window of Opportunity

The current CAD-to-VR pipeline and AR-VR apps publishing leave gaps for smaller, specialized vendors to operate in. But that window of opportunity may not remain open for long, Kuang warns.

“Some hardware makers will begin to develop their own software solutions to address the common use cases. That’s one way the industry might consolidate,” he says. “They’d want to be able to say they can provide you end-to-end AR, so you don’t need to go to a third-party provider. So these other providers need to use the time they have to uncover new usages, specialized applications that are too vertical to draw the hardware maker’s interest.”

Currently, many manufacturing firms think of implementing digital twins after the fact, at the end of the design development where the 3D assets are readily available. But Petit suggests another approach.

“Do not wait till you need a digital twin to build one,” he says. “Build it into your design process, into your product data management system. Build the data preparation efforts. Then the value of the digital twin increases, because any time you want to, you can launch the digital twin.”

About the Author

Kenneth Wong is Digital Engineering’s resident blogger and senior editor. Email him at kennethwong@digitaleng.news or share your thoughts on this article at digitaleng.news/facebook.
